[WIP] How GraphQL Ruby parses queries

![[WIP] How GraphQL Ruby parses queries](/content/images/size/w2000/2019/06/tian-kuan-9AxFJaNySB8-unsplash.jpg)

Recently I started making small contributions to graphql-ruby. This PR to fix a parsing bug got me acquainted with the lexing and parsing of GraphQL queries in Ruby.

On GraphQL Ruby's site, there is documentation on Phases of execution. I hope to do an overview of the Lex and Parse phases, which is not written in Ruby but generates Ruby code 🤯.

I wrote this assuming the reader is just as ignorant as me about compilers. If you spot any inaccuracies in the article, please let me know. If you like to try the code samples in this post, just clone GraphQL-ruby and setup bundle console.

$ git clone https://github.com/rmosolgo/graphql-ruby

$ cd graphql-ruby

$ bundle

$ bundle console # In the console, GraphQL.parse should be availableLexing

Lexical analysis, is the process of converting a sequence of characters into tokens.

When GraphQL.parse is called, GraphQL Ruby lexes the incoming query as the first step. Let's just give the tokenizer a go.

# In a bundle console

query = <<~GRAPHQL

query myNamedOperation($input: SomeInputObject!) {

getProducts(input: $input) {

id

}

}

GRAPHQL

tokens = GraphQL::Language::Lexer.tokenize(query)

# => [(QUERY "query" [1:1]), (IDENTIFIER "myNamedOperation" [1:7]), (LPAREN "(" [1:23]), (VAR_SIGN "$" [1:24]), (INPUT "input" [1:25]), (COLON ":" [1:30]), (IDENTIFIER "SomeInputObject" [1:32]), (BANG "!" [1:47]), (RPAREN ")" [1:48]), (LCURLY "{" [1:50]), (IDENTIFIER "getProducts" [2:3]), (LPAREN "(" [2:14]), (INPUT "input" [2:15]), (COLON ":" [2:20]), (VAR_SIGN "$" [2:22]), (INPUT "input" [2:23]), (RPAREN ")" [2:28]), (LCURLY "{" [2:30]), (IDENTIFIER "id" [3:5]), (RCURLY "}" [4:3]), (RCURLY "}" [5:1])]This might look messy, but let's just take a look at one token.

tokens[6]

# => (IDENTIFIER "SomeInputObject" [1:32])

# name: IDENTIFIER

# value: "SomeInputOject"

# col: 32

# line: 1 It essentially contains the name, value and the text position of the token. If you mapped the names, here's how the query looks like now:

# => [:QUERY, :IDENTIFIER, :LPAREN, :VAR_SIGN, :INPUT, :COLON, :IDENTIFIER, :BANG, :RPAREN, :LCURLY, :IDENTIFIER, :LPAREN, :INPUT, :COLON, :VAR_SIGN, :INPUT, :RPAREN, :LCURLY, :IDENTIFIER, :RCURLY, :RCURLY]Ragel is used to built the Lexer function.

Ragel compiles executable finite state machines from regular languages. Ragel state machines can not only recognize byte sequences as regular expression machines do, but can also execute code at arbitrary points in the recognition of a regular language.

This might sound complicated but essentially Ragel is used here to build a program that processes the query string and emits tokens according to a set of rules. At this point, it doesn't care if you have a well formed GraphQL query.

Let’s look at the code from lexer.rl.

%%{

machine graphql_lexer;

IDENTIFIER = [_A-Za-z][_0-9A-Za-z]*;

NEWLINE = [\c\r\n];

BLANK = [, \t]+;

COMMENT = '#' [^\n\r]*;

INT = '-'? ('0'|[1-9][0-9]*);

FLOAT_DECIMAL = '.'[0-9]+;

FLOAT_EXP = ('e' | 'E')?('+' | '-')?[0-9]+;

FLOAT = INT FLOAT_DECIMAL? FLOAT_EXP?;

ON = 'on';

FRAGMENT = 'fragment';

TRUE = 'true';

...

main := |*

INT => { emit(:INT, ts, te, meta) };

FLOAT => { emit(:FLOAT, ts, te, meta) };

ON => { emit(:ON, ts, te, meta) };

FRAGMENT => { emit(:FRAGMENT, ts, te, meta) };

TRUE => { emit(:TRUE, ts, te, meta) };

FALSE => { emit(:FALSE, ts, te, meta) };

NULL => { emit(:NULL, ts, te, meta) };

QUERY => { emit(:QUERY, ts, te, meta) };

MUTATION => { emit(:MUTATION, ts, te, meta) };

SUBSCRIPTION => { emit(:SUBSCRIPTION, ts, te, meta) };

...

%%}

def self.emit(token_name, ts, te, meta)

meta[:tokens] << token = GraphQL::Language::Token.new(

name: token_name,

value: meta[:data][ts...te].pack(PACK_DIRECTIVE).force_encoding(UTF_8_ENCODING),

line: meta[:line],

col: meta[:col],

prev_token: meta[:previous_token],

)

meta[:previous_token] = token

# Bump the column counter for the next token

meta[:col] += te - ts

endEssentially, the Lexer converts characters sequences from the query into tokens. It also does other important things like standardizing, removing non significant spaces/characters.

An interesting thing is to look at how it deals with number representations.

GraphQL::Language::Lexer.tokenize('34.5 10 "5"')

# => [(FLOAT "34.5" [1:1]), (INT "10" [1:6]), (STRING "5" [1:9])]Now that we have an array of tokens, we can start parsing them.

The Parsing Step

Next, graphql-ruby uses Racc to parse the tokenized query.

Racc (Ruby yACC) is a LALR(1) parser generator. It is written in Ruby itself, and generates Ruby programs.

Yacc stands for Yet Another Compiler Compiler which provides a tool to produce a parser for a given grammar 🤯. For example, here's the the grammar file for ANSI C.

Let's dig into parser.y.

class GraphQL::Language::Parser

rule

target: document

document: definitions_list { return make_node(:Document, definitions: val[0])}

definitions_list:

definition { return [val[0]]}

| definitions_list definition { val[0] << val[1] }

definition:

executable_definition

| type_system_definition

| type_system_extension

executable_definition:

operation_definition

| fragment_definition

The | stands for union and kind of acts like an OR operator. So here it's basically reading:

- For a

document, it should have adefinitions_list - that has a

definitionor a list ofdefinitions(recursive) - And each definition can be an

executable_definitionor atype_system_definitionor atype_system_extension

This can go on for a few levels. But let's take a look at a smaller subset of parsing.

arguments_list:

argument { return [val[0]] }

| arguments_list argument { val[0] << val[1] }

argument:

name COLON input_value { return make_node(:Argument, name: val[0], value: val[2], position_source: val[0]) }

input_value:

literal_value

| variable

| object_valueFamiliar? The upper cased tokens are from the earlier lexing step. The lower cased ones are tokens defined in our parser.

- For an

arguments_list, it could be one argument or many (arguments_list argument). - Each argument is defined with a

name, separated by aCOLONand has aninput_value - Where the input value can be a

literal_valueorvariableorobject_value.

Once we grok this, it's actually quite easy to read! Now, the matched token values are returned in the val array. For example:

For a query string part node(id: 3) , the argument definition returns

[(IDENTIFIER "id" [1:8]), (COLON ":" [1:10]), 3]Finally, make_node is a method defined to build a node for a branch of the AST.

def make_node(node_name, assigns)

assigns.each do |key, value|

if key != :position_source && value.is_a?(GraphQL::Language::Token)

assigns[key] = value.to_s

end

end

assigns[:filename] = @filename

GraphQL::Language::Nodes.const_get(node_name).new(assigns)

endGraphQL::Language::Nodes::Argument nodeArmed with this knowledge, you can should be able to grok most of the grammar. There are a lot of shared definitions in parser.y, it's really neat.

Back to the Spec...

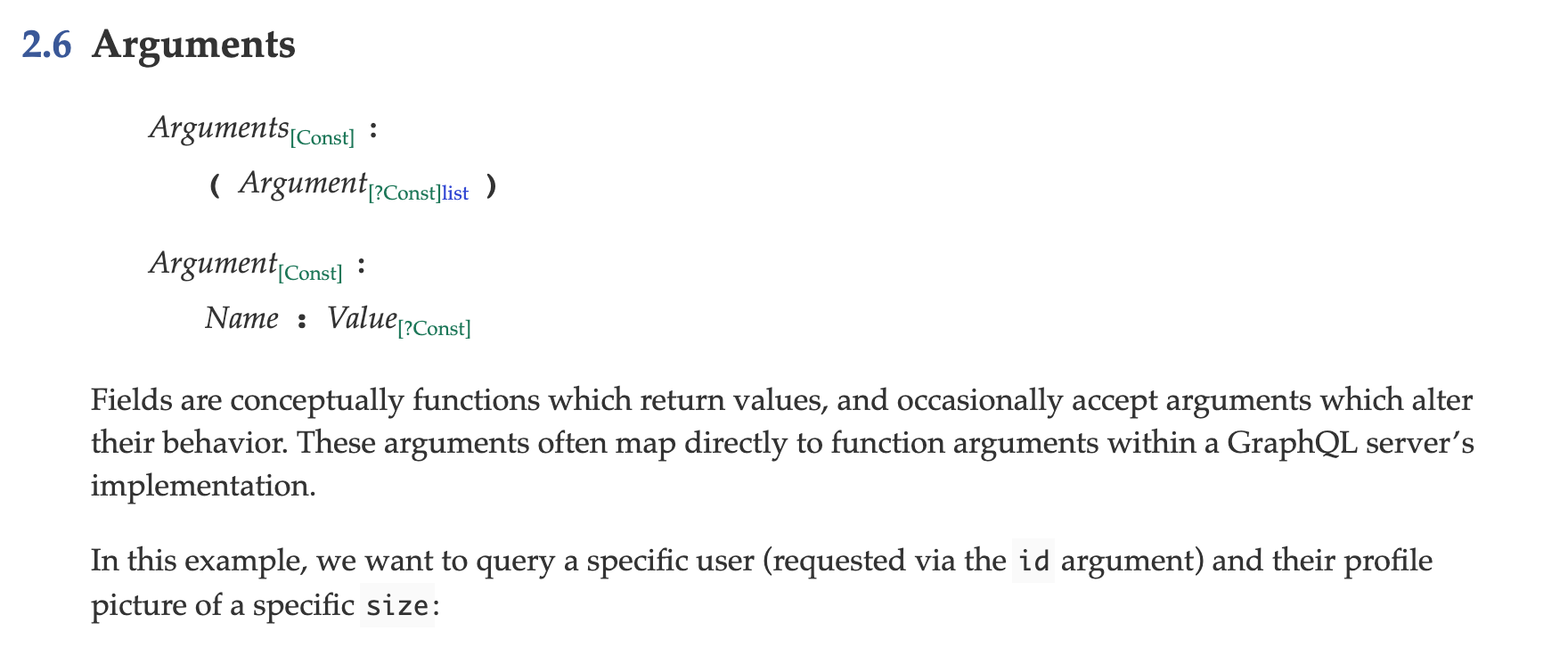

Now if you take a look the Argument definition on the GraphQL spec.

Does the grammar not look familiar to the Racc file we've been working with?

The AST Tree

TODO

- What is an AST tree?

- Why AST tree?

- How is it used in validation and execution?

Parting Words

The astute reader might also notice that to this point, we haven't really involved any parts of your domain's schema definition.

We have only parsed a given query to ensure syntactic correctness. These of concerns. The lex/parse phases are low level implementation details that most contributors won't have to deal with unless there is a spec change.

References: